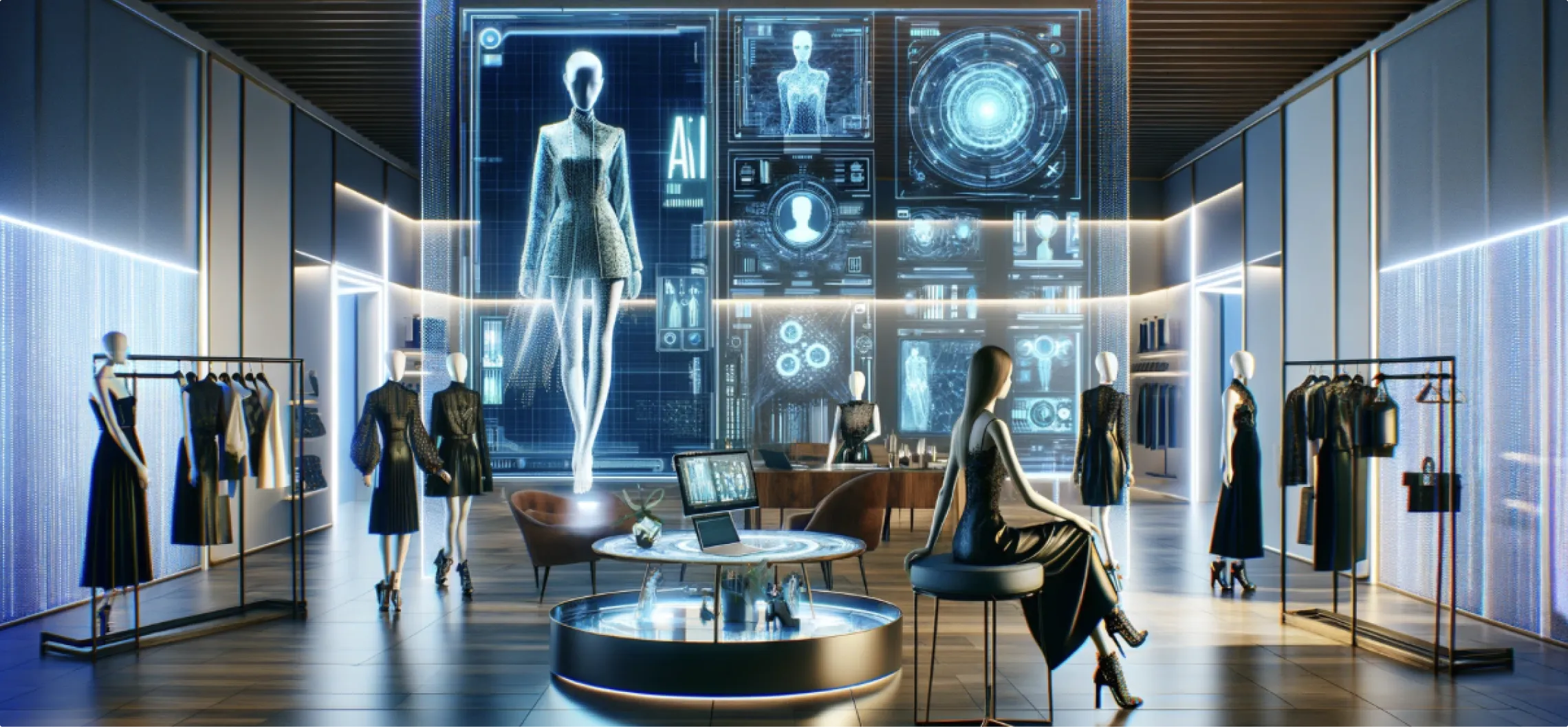

Bridging The Gap ft. Motion Capture

Star Sports, Airtel

Motion Capture

For IPL 2023, IIC got the mandate to design an unprecedented experience for sports enthusiasts across the country: the Airtel Ultimate Fan, a real-time interaction between cricket stars and avatars of cricket fans. This was a first in the history of the Indian broadcast industry. Fans were beamed in from 10 locations across the country and transported directly to the Star Sports studios, where they interacted live with their favorite cricket players.

First-ever real-time interaction between cricket stars and fan avatars during IPL 2023

Avatars of fans from 10 locations across the country engaged in live interactions

A Product of Extensive Research

When our team first learned about this project, we were excited and well aware of the numerous hurdles that lay ahead. A real-time interaction between a virtual avatar and a human had never been done on Indian television, so we had almost no reference points, and comprehensive R&D followed.

All About Photorealism

The initial challenges involved establishing industry-leading motion capture (mo-cap) and photogrammetry pipelines. After trial and error with depth-based photogrammetry and various online plugins for creating 3D models and facial textures, we finalized a highly technical photogrammetry pipeline incorporating 12 camera setups and Reality Capture software.

Photogrammetry is a three-dimensional measuring technique that uses photographs as the basis for metrology. Using it, we performed a full 360-degree scan of each fan, generating detailed 3D face mesh data and photorealistic facial textures. The photogrammetry pipeline used three strategically positioned cameras capturing top, mid, and low angles to scan the face, head structure, skin texture, and facial features of the fans.

We then built a customized studio designed to meet the character and interaction design specifications. Studio fabrication included the careful design of side panels and flooring to provide an immersive backdrop for the avatar interaction.

The capture process started with over 150 photographs of each fan, taken from multiple angles with DSLR cameras. To ensure technical precision, we post-processed the raw image data by adjusting the exposure, reducing the highlights, and lifting the shadows.
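To make the post-processing step concrete, here is a minimal Python sketch of the three adjustments applied to normalized luminance values. It is an illustration only, not the team's actual tooling: the function names, the weighting curves, and the parameter values are all assumptions chosen for clarity.

```python
def adjust_tone(value, exposure_stops=0.0, highlights=0.0, shadows=0.0):
    """Adjust one luminance value in [0, 1].

    All parameters are hypothetical: exposure is given in stops, while
    `highlights` and `shadows` are signed amounts (negative darkens,
    positive brightens) that mostly affect bright or dark values.
    """
    # Exposure: each stop doubles (or halves) the linear value.
    v = value * (2.0 ** exposure_stops)
    v = min(max(v, 0.0), 1.0)

    # Weights that concentrate each adjustment in its own tonal range.
    highlight_weight = v ** 2
    shadow_weight = (1.0 - v) ** 2

    v = v + highlights * highlight_weight + shadows * shadow_weight
    return min(max(v, 0.0), 1.0)


def adjust_image(rows, **settings):
    """Apply adjust_tone to every value of a grayscale image (list of rows)."""
    return [[adjust_tone(v, **settings) for v in row] for row in rows]


if __name__ == "__main__":
    image = [[0.0, 0.5, 1.0]]
    # Pull highlights down and lift shadows by the same illustrative amount.
    print(adjust_image(image, highlights=-0.2, shadows=0.2))
```

Evening out the tonal range in this way gives the reconstruction software more consistent detail to match across the photographs, which is the usual reason for such a step before alignment.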

Avatars Coming to Life

Next, we used Reality Capture to turn the scanned photographs into cohesive 3D meshes. Along the way, we gained valuable insight into optimal camera settings, studio lighting configurations, and the essential alignment and reconstruction steps within Reality Capture.

One of the most demanding aspects of photogrammetry proved to be generating accurate facial textures that matched the fans' faces. This involved extracting the image dataset and carrying out meticulous cleaning and painting work.

Hair was created with the Maya XGen tool, with Substance 3D Painter and Photoshop used for texture enhancement. Binding the hair to the digital human character mesh in Unreal was a critical step, followed by simulations to ensure realism and prevent penetration issues. We iterated within XGen to address any issues we found, resulting in naturally flowing hair that obeyed the laws of physics.

The clothing pipeline was built on CLO3D. Starting with the character mesh, we tailored clothing pieces (pants, shirts, jeans, t-shirts, and more) for a close fit and fine detailing. Subsequent steps included retopology in Blender for mesh optimization, Substance 3D Painter for texturing, and finally binding the garments to the MetaHuman skeleton in Unreal. Applying physics then refined the natural draping and movement of the clothing.

Replicating Human Emotions to the T

Once the photogrammetry and texture generation workflows were refined, we turned our focus to facial and body motion capture, using Xsens mo-cap suits and advanced software solutions for this crucial stage of the pipeline. To capture realistic facial expressions and finger movements, we used Faceware mp5 tracking together with the Manus multi-finger tracking system. Faceware enabled us to capture the fans' live facial expressions and translate them precisely into digital data through cameras and mo-cap technology. It also provided live eye tracking and computer facial animation, and it sped up the work by bypassing traditional animation techniques. The Faceware Studio software application further supported real-time facial performances. Throughout this process, we familiarized ourselves with the Faceware setup, software workflows, and calibration techniques to ensure optimal tracking data quality.

Diving Deep into MoCap Workflows

With facial performance capture in place, our next hurdle was translating these expressions into seamless MetaHuman facial animations. Through extensive R&D, we dug into mo-cap workflows and the non-real-time technical processes of animation software. Analyzing facial features, tracking data, and parameterizing performance files paved the way for transferring data to the downstream animation software. While Faceware greatly reduced manual animation work, it did not deliver 100 percent accurate data, so the facial animations needed meticulous cleanup. As a result, retargeting mo-cap face skeleton data to MetaHuman face skeleton data became an arduous task. Autodesk Maya served as the primary tool for animation cleanup, which meant correcting anomalies such as marker occlusions and jittery movements in the animation. Retargeting and refining the animation was one of the most formidable challenges we encountered, and overcoming it resulted in true-to-life facial animation.
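The two cleanup problems named above, marker occlusions and jitter, can be pictured with a small Python sketch on a single animation curve. This is a generic illustration under stated assumptions, not the Maya workflow the team used: occluded frames are assumed to arrive as `None`, gaps are filled by linear interpolation, and jitter is reduced with a simple moving average. The function names and the window size are hypothetical.

```python
def fill_gaps(samples):
    """Fill occluded frames (None) by linear interpolation.

    Gaps at the start or end of the curve hold the nearest valid value.
    """
    valid = [i for i, s in enumerate(samples) if s is not None]
    if not valid:
        raise ValueError("curve has no valid samples")

    filled = list(samples)
    for i, s in enumerate(samples):
        if s is not None:
            continue
        prev = max((j for j in valid if j < i), default=None)
        nxt = min((j for j in valid if j > i), default=None)
        if prev is None:
            filled[i] = samples[nxt]
        elif nxt is None:
            filled[i] = samples[prev]
        else:
            t = (i - prev) / (nxt - prev)
            filled[i] = samples[prev] + t * (samples[nxt] - samples[prev])
    return filled


def smooth(samples, window=3):
    """Centered moving average; the window shrinks at the curve ends."""
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out


if __name__ == "__main__":
    # One hypothetical jaw-open curve with two occluded frames.
    raw = [0.0, 0.1, None, None, 0.4, 0.9, 0.4, 0.5]
    print(smooth(fill_gaps(raw)))
```

A wider window removes more jitter but also flattens fast, intentional movements such as blinks, so in practice the amount of smoothing is a judgment call made per channel.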

Calibrating for the Win

Our pipeline extended beyond facial animation. The next task was calibrating and retargeting body motion capture to MetaHuman using Autodesk Maya. Although MotionBuilder is the industry-standard animation software for this work, our objective was to replace it with Autodesk Maya for animation cleanup and for retargeting mo-cap body skeleton data to MetaHuman body skeleton data. Countless obstacles, bugs, and T-pose errors stood in our way. Through rigorous research, testing, and trials, we eventually succeeded in retargeting mo-cap data to MetaHuman using HumanIK. This marked a significant milestone and solidified our expertise in body motion capture animation workflows.

Post-Processing & Real-time Rendering

Next, we brought the post-processed animations into Unreal Engine and applied them to the MetaHuman. Initially, we ran into problems such as incorrect animation playback, face-body detachment, and distortions within the MetaHuman. We resolved these by fine-tuning materials, optimizing lighting setups, and improving the quality of the mo-cap animation output in Unreal Engine.

The process also involved real-time animation synchronization across the different locations through a VPN-enabled Live Link. By connecting character and animation, this mechanism allowed characters to respond to triggers activated remotely. The bridge created by Live Link enabled a unified experience across physical locations.
